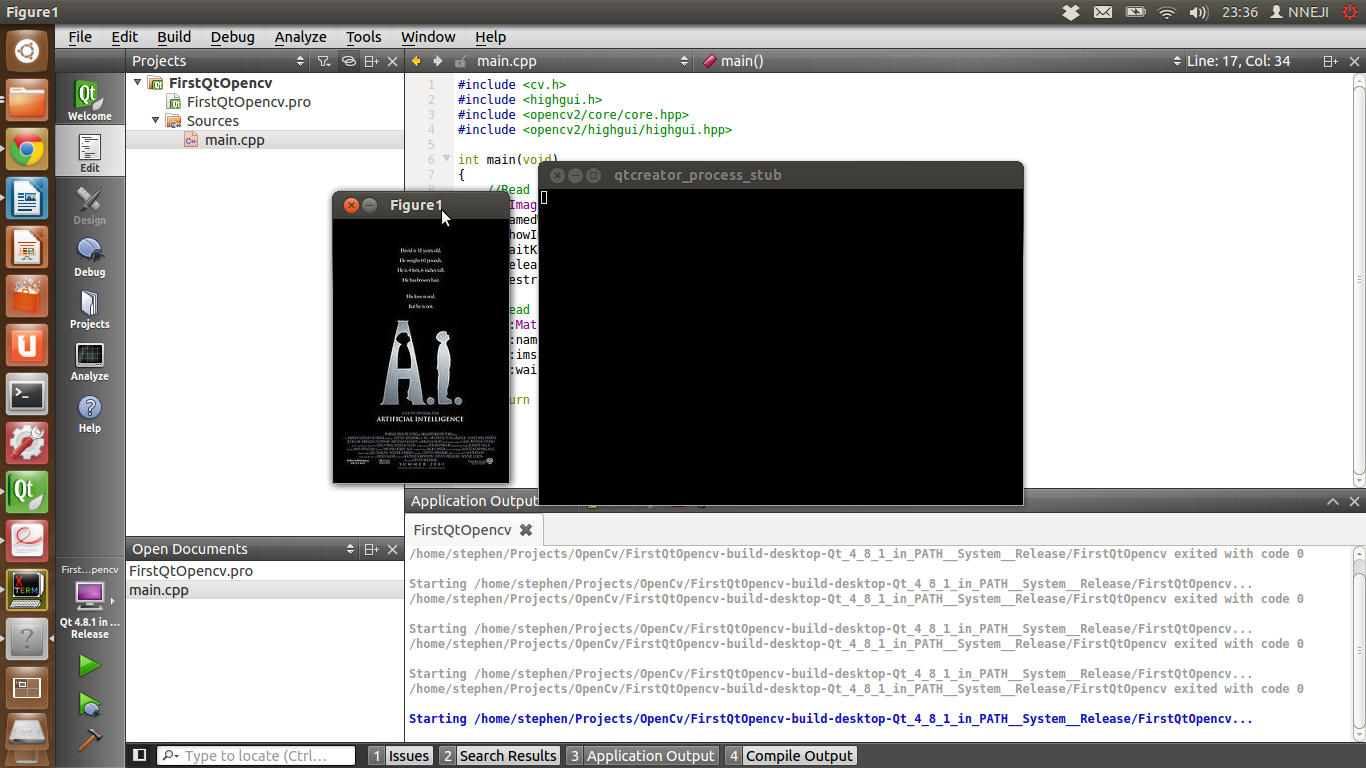

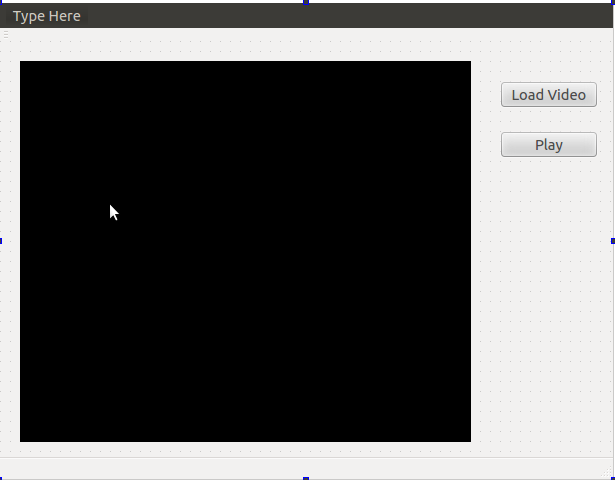

Video processing is a very important task in computer vision applications. OpenCV comes with its own GUI library (highgui), but this library has no support for buttons and some other GUI components. It can therefore be preferable to use a Qt GUI application, but displaying a video in a Qt GUI is not as intuitive as it is with highgui. This tutorial shows you how to display video in a Qt GUI without the GUI becoming unresponsive. We will create a simple video player like the one in the screenshots, programming in C++.

STEP 1: Create a new Qt GUI Project

If you don't know how to do this, check out the guide here. The guide shows you how to create an OpenCV console project in Qt Creator, but this time, instead of a Qt Console Application, create a Qt GUI Application. Once the project is created, the following files are automatically added to it:

| File | Description |
|------|-------------|
| `main.cpp` | Contains the main function, which is the starting point of every C++ application. It is the main function that loads the main window of the GUI application. |
| `mainwindow.cpp` | The source file that contains the MainWindow class implementation. |
| `mainwindow.h` | Contains the class declaration for the MainWindow class. |
| `mainwindow.ui` | The UI designer file, which can be used to tweak the GUI. |
| `<projectName>.pro` | Contains the settings used to compile the project. |

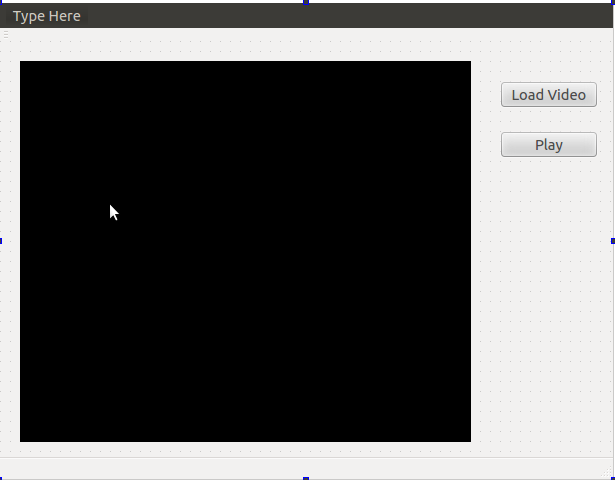

Add Widgets to the GUI

Open the mainwindow.ui file. This file could be edited manually, but for this tutorial we will use the designer.

From the list of widgets on the left of the designer, drag in a label and two push button widgets.

Change the text on the first button to “Load Video” and on the second button to “Play”, then clear the text on the label. To change the text on a widget, just double-click the widget; the text will be highlighted, and you can change it and press Enter when finished.

Change the background colour of the label to a darker colour. The best way to change the background colour of a Qt widget is to use Cascading Style Sheets (CSS). Select the label, find the styleSheet property in the property window, click the button with three dots (ellipsis), add this line of CSS in the “Edit Style Sheet” window and save it.

The GUI should now look something like this:

Player Class Definition

Now we add a new class to handle the video player control. We will call it the “Player” class; the following class definition should be added to player.h:

```cpp
#ifndef PLAYER_H
#define PLAYER_H

#include <QMutex>
#include <QThread>
#include <QImage>
#include <QWaitCondition>
#include <atomic>
#include <string>
#include <opencv2/core/core.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <opencv2/highgui/highgui.hpp>

using namespace cv;

class Player : public QThread
{
    Q_OBJECT

private:
    // Written by the GUI thread and read by run() on the worker thread,
    // so it is atomic to avoid a data race.
    std::atomic<bool> stop;
    QMutex mutex;
    QWaitCondition condition;
    Mat frame;
    int frameRate;
    VideoCapture capture;
    Mat RGBframe;
    QImage img;

signals:
    // Signal to output frame to be displayed
    void processedImage(const QImage &image);

protected:
    void run();
    void msleep(int ms);

public:
    // Constructor
    Player(QObject *parent = 0);
    // Destructor
    ~Player();
    // Load a video from a file
    bool loadVideo(std::string filename);
    // Play the video
    void Play();
    // Stop the video
    void Stop();
    // Check if the player has been stopped
    bool isStopped() const;
};

#endif // PLAYER_H
```

The class definition is simple and straightforward. The first thing to note is that the Player class inherits from the QThread class, which allows it to run on its own thread. This is very important: it keeps the main window responsive while the video is playing. Without it, the GUI would freeze until the video had finished playing. The processedImage(...) signal is used to send the video frames to the main window (we will see how this works later).
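The same structure can be sketched in standard C++ to show why a separate thread keeps the caller responsive: `play()` returns immediately while the frame loop runs elsewhere, and an atomic flag stops it. This is an analogy rather than the tutorial's Qt code; `FrameWorker`, its `onFrame` callback (standing in for the `processedImage(...)` signal) and the integer "frames" are hypothetical.

```cpp
#include <atomic>
#include <chrono>
#include <functional>
#include <thread>
#include <utility>

// Hypothetical stand-in for Player: runs a frame loop on its own thread.
class FrameWorker {
public:
    explicit FrameWorker(std::function<void(int)> onFrame)
        : onFrame_(std::move(onFrame)) {}

    // Like Player's destructor: stop the loop and wait for the thread.
    ~FrameWorker() { stop(); }

    // Like Player::Play(): start the loop only if no thread is active.
    // (A finished loop must be joined with stop() before playing again.)
    void play(int frameCount, std::chrono::milliseconds delay) {
        if (thread_.joinable()) return;
        stop_ = false;
        thread_ = std::thread([this, frameCount, delay] {
            for (int i = 0; i < frameCount && !stop_; ++i) {
                onFrame_(i);                        // "emit" the frame
                std::this_thread::sleep_for(delay); // pace the playback
            }
        });
    }

    // Like Player::Stop() followed by QThread::wait().
    void stop() {
        stop_ = true;
        if (thread_.joinable()) thread_.join();
    }

private:
    std::function<void(int)> onFrame_;
    std::atomic<bool> stop_{true};
    std::thread thread_;
};
```

The destructor calls `stop()` before the `std::thread` member is destroyed, because destroying a joinable `std::thread` calls `std::terminate()`. Qt likewise reports an error if a `QThread` is destroyed while it is still running, which is why `Player`'s destructor calls `wait()`.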

Player Class Implementation

Here is the constructor for the Player class:

```cpp
#include "player.h"

Player::Player(QObject *parent)
    : QThread(parent)
{
    stop = true;
    frameRate = 0;   // set properly by loadVideo()
}
```

Here we simply initialise the class variables stop and frameRate.

```cpp
bool Player::loadVideo(std::string filename) {
    capture.open(filename);
    if (capture.isOpened())
    {
        // cv::CAP_PROP_FPS is CV_CAP_PROP_FPS on OpenCV 2.x.
        // The reported rate may be 0 if the container does not store it.
        frameRate = (int) capture.get(cv::CAP_PROP_FPS);
        return true;
    }
    else
        return false;
}
```

In the loadVideo() method, we use the VideoCapture instance to open the video and read its frame rate. As you may already know, the VideoCapture class comes from the OpenCV library.
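One caveat: `capture.get(...)` returns a `double`, and for some files or streams the frame rate is reported as 0 (unknown), which would make a later `1000/frameRate` division fail. Truncating 29.97 fps to `int` also lengthens the delay slightly. A minimal sketch of a safer conversion, assuming a 25 fps fallback (the `frameDelayMs` helper is hypothetical, not part of OpenCV or of this project):

```cpp
#include <cmath>

// Hypothetical helper: per-frame delay in milliseconds for a given frame rate.
// Uses the rate as a double (no truncation of e.g. 29.97 fps) and falls back
// to an assumed default of 40 ms (25 fps) when the rate is 0, negative or NaN.
int frameDelayMs(double fps, int fallbackMs = 40) {
    if (!(fps > 0.0)) return fallbackMs;   // this test also rejects NaN
    return static_cast<int>(std::lround(1000.0 / fps));
}
```

It could be used as `int delay = frameDelayMs(capture.get(cv::CAP_PROP_FPS));` in place of the integer division.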

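```cpp
void Player::Play()
{
    if (!isRunning()) {
        if (isStopped()){
            stop = false;
        }
        start(LowPriority);
    }
}
```

The public method Play() clears the stop flag and calls QThread::start(), which creates a new thread and calls run(), our override of QThread::run(), inside it. Calling run() directly would execute the loop on the GUI thread and freeze the window.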

```cpp
void Player::run()
{
    // Assume 25 fps (40 ms) if the frame rate is unknown, avoiding a division by zero.
    int delay = (frameRate > 0) ? (1000 / frameRate) : 40;
    while(!stop){
        if (!capture.read(frame))
        {
            stop = true;   // end of the video or a read error
            break;
        }
        if (frame.channels() == 3){
            cv::cvtColor(frame, RGBframe, cv::COLOR_BGR2RGB);
        }
        else
        {
            // Grayscale frame: expand it to RGB so a single QImage format covers both cases
            cv::cvtColor(frame, RGBframe, cv::COLOR_GRAY2RGB);
        }
        // Pass the row stride (step) and deep-copy the pixels: the QImage would otherwise
        // point into RGBframe, which the next iteration overwrites while the GUI draws it.
        img = QImage((const unsigned char*)(RGBframe.data),
                     RGBframe.cols, RGBframe.rows, static_cast<int>(RGBframe.step),
                     QImage::Format_RGB888).copy();
        emit processedImage(img);
        this->msleep(delay);
    }
}
```

In the run method, we use a while loop to play the video. After each frame is read, it is converted into a QImage, and the QImage is emitted to the MainWindow object through the processedImage(...) signal. At the end of each iteration we wait for a number of milliseconds (delay), calculated from the frame rate of the video. If the frames were being processed, it would be advisable to factor the processing time into the delay.
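Factoring in the processing time means measuring how long the current iteration has already taken and sleeping only for what is left of the frame period. A minimal sketch with `std::chrono::steady_clock`; the `remainingDelay` helper is hypothetical:

```cpp
#include <algorithm>
#include <chrono>

using Clock = std::chrono::steady_clock;

// Hypothetical helper: how long to sleep so that one loop iteration lasts
// about one frame period, given the time already spent on this frame
// (reading, converting, processing, emitting) since frameStart.
std::chrono::milliseconds remainingDelay(std::chrono::milliseconds period,
                                         Clock::time_point frameStart,
                                         Clock::time_point now) {
    auto spent = std::chrono::duration_cast<std::chrono::milliseconds>(now - frameStart);
    // Never sleep a negative amount: if processing overran, continue at once.
    return std::max(period - spent, std::chrono::milliseconds(0));
}
```

Inside the loop you would record `Clock::now()` at the top of each iteration and pass `static_cast<int>(remainingDelay(...).count())` to `msleep()` instead of the fixed delay.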

```cpp
Player::~Player()
{
    mutex.lock();
    stop = true;
    condition.wakeOne();
    mutex.unlock();
    wait();              // wait for run() to exit first...
    capture.release();   // ...so the capture is not released while it is being read
}

void Player::Stop()
{
    stop = true;
}

void Player::msleep(int ms){
    // Delegate to QThread's portable sleep (nanosleep() is POSIX-only).
    QThread::msleep(ms);
}

bool Player::isStopped() const{
    return this->stop;
}
```

Here is the rest of the Player class. In the destructor we set the stop flag, wait for the run method to exit, and only then release the VideoCapture object.

MainWindow Class Definition

```cpp
#ifndef MAINWINDOW_H
#define MAINWINDOW_H

#include <QMainWindow>
#include <QFileDialog>
#include <QMessageBox>
#include "player.h"

namespace Ui {
class MainWindow;
}

class MainWindow : public QMainWindow
{
    Q_OBJECT

public:
    explicit MainWindow(QWidget *parent = 0);
    ~MainWindow();

private slots:
    // Display video frame in player UI
    void updatePlayerUI(QImage img);
    // Slot for the load video push button.
    void on_pushButton_clicked();
    // Slot for the play push button.
    void on_pushButton_2_clicked();

private:
    Ui::MainWindow *ui;
    Player* myPlayer;
};

#endif // MAINWINDOW_H
```

Here is the class definition for the MainWindow class. We declare the clicked slots for both buttons and an updatePlayerUI slot, along with a myPlayer pointer that holds an instance of the Player class.

MainWindow Class Implementation

```cpp
#include "mainwindow.h"
#include "ui_mainwindow.h"

MainWindow::MainWindow(QWidget *parent) :
    QMainWindow(parent),
    ui(new Ui::MainWindow)
{
    myPlayer = new Player();
    // Every frame emitted by the player is delivered to updatePlayerUI()
    QObject::connect(myPlayer, SIGNAL(processedImage(QImage)),
                     this, SLOT(updatePlayerUI(QImage)));
    ui->setupUi(this);
}
```

We initialise myPlayer and connect the signal emitted by the Player class to the updatePlayerUI(...) slot, so every frame that is emitted is passed to this slot.

```cpp
void MainWindow::updatePlayerUI(QImage img)
{
    if (!img.isNull())
    {
        ui->label->setAlignment(Qt::AlignCenter);
        // Scale the frame to the label size, preserving its aspect ratio
        ui->label->setPixmap(QPixmap::fromImage(img).scaled(ui->label->size(),
                             Qt::KeepAspectRatio, Qt::FastTransformation));
    }
}
```

The updatePlayerUI slot receives a QImage, scales it to fit the label while keeping the aspect ratio, and displays it by setting the label's pixmap.
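The size that `scaled(..., Qt::KeepAspectRatio, ...)` produces can be worked out by hand: scale the image to the label's full height, and if that makes it too wide, scale it to the label's full width instead. A plain C++ sketch of that arithmetic (the `Size` struct and `fitKeepAspectRatio` are hypothetical, and rounding may differ slightly from Qt's own implementation):

```cpp
#include <cstdint>

struct Size { int width; int height; };

// Hypothetical helper mirroring the Qt::KeepAspectRatio rule: the largest
// size that fits inside `bounds` while keeping the aspect ratio of `source`.
Size fitKeepAspectRatio(Size source, Size bounds) {
    if (source.width <= 0 || source.height <= 0) return {0, 0};
    // Width obtained by using the full bounding height (64-bit to avoid overflow).
    std::int64_t w = std::int64_t(bounds.height) * source.width / source.height;
    if (w <= bounds.width) {
        return {static_cast<int>(w), bounds.height};
    }
    // Too wide: the bounding width is the limiting side instead.
    std::int64_t h = std::int64_t(bounds.width) * source.height / source.width;
    return {bounds.width, static_cast<int>(h)};
}
```

For example, a 1920×1080 frame shown in a 640×480 label comes out as 640×360, leaving empty bands above and below that `Qt::AlignCenter` balances.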

```cpp
MainWindow::~MainWindow()
{
    delete myPlayer;   // Player's destructor stops and waits for its thread
    delete ui;
}

void MainWindow::on_pushButton_clicked()
{
    QString filename = QFileDialog::getOpenFileName(this,
                           tr("Open Video"), ".",
                           tr("Video Files (*.avi *.mpg *.mp4)"));
    if (!filename.isEmpty()){
        // toLocal8Bit() converts the path using the system's 8-bit encoding;
        // toAscii() no longer exists in Qt 5.
        if (!myPlayer->loadVideo(filename.toLocal8Bit().constData()))
        {
            QMessageBox msgBox;
            msgBox.setText("The selected video could not be opened!");
            msgBox.exec();
        }
    }
}

void MainWindow::on_pushButton_2_clicked()
{
    if (myPlayer->isStopped())
    {
        myPlayer->Play();
        ui->pushButton_2->setText(tr("Stop"));
    }else
    {
        myPlayer->Stop();
        ui->pushButton_2->setText(tr("Play"));
    }
}
```

This is the remaining part of the MainWindow class: the destructor, the "Load Video" (pushButton) slot and the "Play" (pushButton_2) slot, which are all pretty straightforward.

main() Function

```cpp
#include <QApplication>
#include "mainwindow.h"

int main(int argc, char *argv[])
{
    QApplication a(argc, argv);
    MainWindow* w = new MainWindow();
    w->setAttribute(Qt::WA_DeleteOnClose, true);
    w->show();
    return a.exec();
}
```

And finally, in the main function we create an instance of the MainWindow class and set the delete-on-close attribute, so that the window and the objects it owns are destroyed when it is closed.

Final words...

This is just a simple tutorial to help anyone get started with videos in OpenCV and Qt. Note that there are other ways to handle video in Qt, such as the Phonon multimedia framework. Please let me know if this was helpful, and ask any questions in the comments. Happy coding!

UPDATE: See Part 2 of this tutorial here, where we show you how to add a track bar that lets the user control the video.